Hallucinating Art Models, Sound Design in Python, and a Little Canvas

#050 - Creative Coding / Generative Arts Weekly

Website | Instagram | YouTube | Behance | Twitter | BuyMeACoffee

Make things happen. Don't let things happen to you. -Syed Sharukh

Hello, creatives!

Over the last year I’ve spent quite a bit of time learning a range of technologies to keep building the skills I need to try out the ideas I have in mind. Much of this work involves real-life materials driven by generative techniques, and I hope to share it in the future.

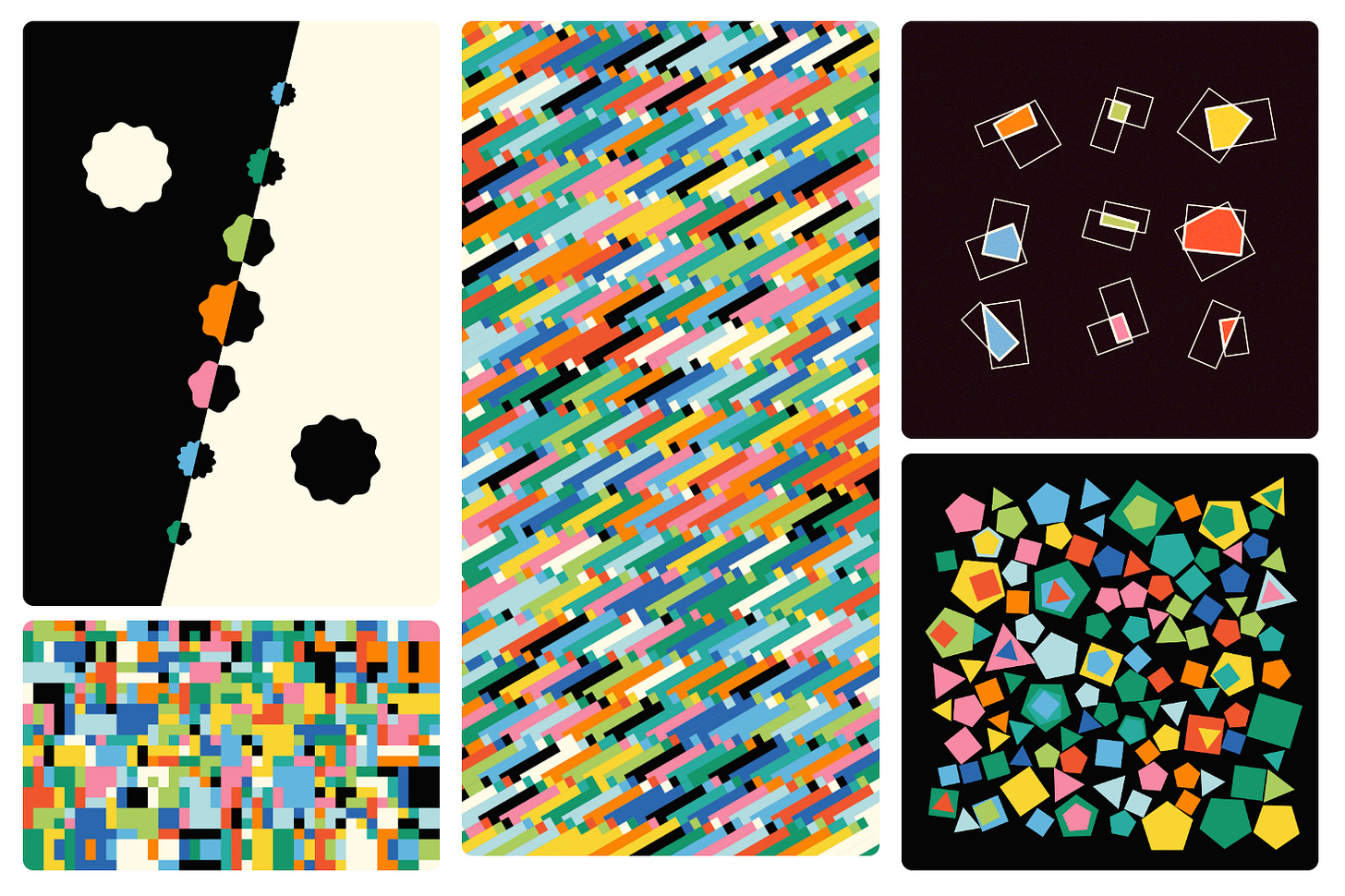

Another thing I’ve been working on is getting comfortable with the canvas API directly (as opposed to p5.js or something similar). I wrote a little tutorial along the way and thought I’d share it with you all. It’s plain, but it helped me find my bearings with Observable. All of the code is hidden when you first open it, but each graphic (look for the ▷ on the side) reveals its code to study, and I’ve added a few tips as I went. I hope it’s helpful if you haven’t worked with canvas before.

Have a great week!

Chris

🖌️ Unconventional Media

I embarked on a year-long journey to find a way to print a 5-piece fashion collection as part of my graduate collection at Shenkar. Using soft materials and flexible patterns, I printed this collection at home.

I have a soft spot in my heart for 3D-printed materials, as they are another medium for creativity. Though this project is several years old, it shows how new mediums can open up alternative avenues of creation and generation.

🎵 Generative Sound

Drop the DAW: Sound Design in Python

The above tutorial uses Python as an alternative to the more common C++ libraries, which make sense for building virtual instruments but are less suited to quick iteration. The presentation is a solid starting point for sound design in Python.
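The talk’s own code isn’t reproduced here. As a minimal sketch of the "no DAW" idea, assuming only Python’s standard library (`math`, `struct`, `wave`), the following synthesizes a few decaying sine tones, mixes them, and writes a 16-bit mono WAV file. The helper names (`render_tone`, `mix`, `write_wav`), the 44.1 kHz sample rate, and the exponential "pluck" envelope are illustrative assumptions, not taken from the presentation.

```python
import math
import struct
import wave

SAMPLE_RATE = 44_100  # samples per second (assumed CD-quality rate)


def render_tone(freq_hz: float, duration_s: float, decay: float = 4.0,
                amplitude: float = 0.5) -> list[float]:
    """Return a sine tone shaped by an exponential decay envelope.

    Samples are floats in [-amplitude, amplitude].
    """
    n = int(SAMPLE_RATE * duration_s)
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        envelope = math.exp(-decay * t)  # simple "pluck": loud attack, fading tail
        samples.append(amplitude * envelope * math.sin(2 * math.pi * freq_hz * t))
    return samples


def mix(*tracks: list[float]) -> list[float]:
    """Sum tracks sample by sample (shorter ones padded with silence),
    then scale down so the peak never exceeds 1.0."""
    length = max(len(t) for t in tracks)
    out = [sum(t[i] for t in tracks if i < len(t)) for i in range(length)]
    peak = max((abs(s) for s in out), default=0.0)
    return [s / peak for s in out] if peak > 1.0 else out


def write_wav(path: str, samples: list[float]) -> None:
    """Write mono 16-bit PCM samples (floats in [-1, 1]) to a WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(SAMPLE_RATE)
        frames = b"".join(
            # clamp to [-1, 1], scale to signed 16-bit, pack little-endian
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
            for s in samples
        )
        wav.writeframes(frames)


if __name__ == "__main__":
    # A minor triad: A3, C4, E4, each two seconds long
    chord = mix(render_tone(220.0, 2.0),
                render_tone(261.63, 2.0),
                render_tone(329.63, 2.0))
    write_wav("chord.wav", chord)
```

Running it writes `chord.wav`. Changing the waveform or the `decay` value and re-running takes seconds, which is the kind of quick iteration where Python shines compared with a C++ toolchain.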

🎨 AI Art

Hallucinating with art models

Wow, long time, no posts! Anyway, about them text-to-art generative models going about, eh? Surprising nobody: I am extremely into them. I’ve been using DALL-E and MidJourney since they came out, and even though tons has been written about them, I wanted to give a slightly different overview: the perspective of someone who isn’t interested that much in their realism skills.

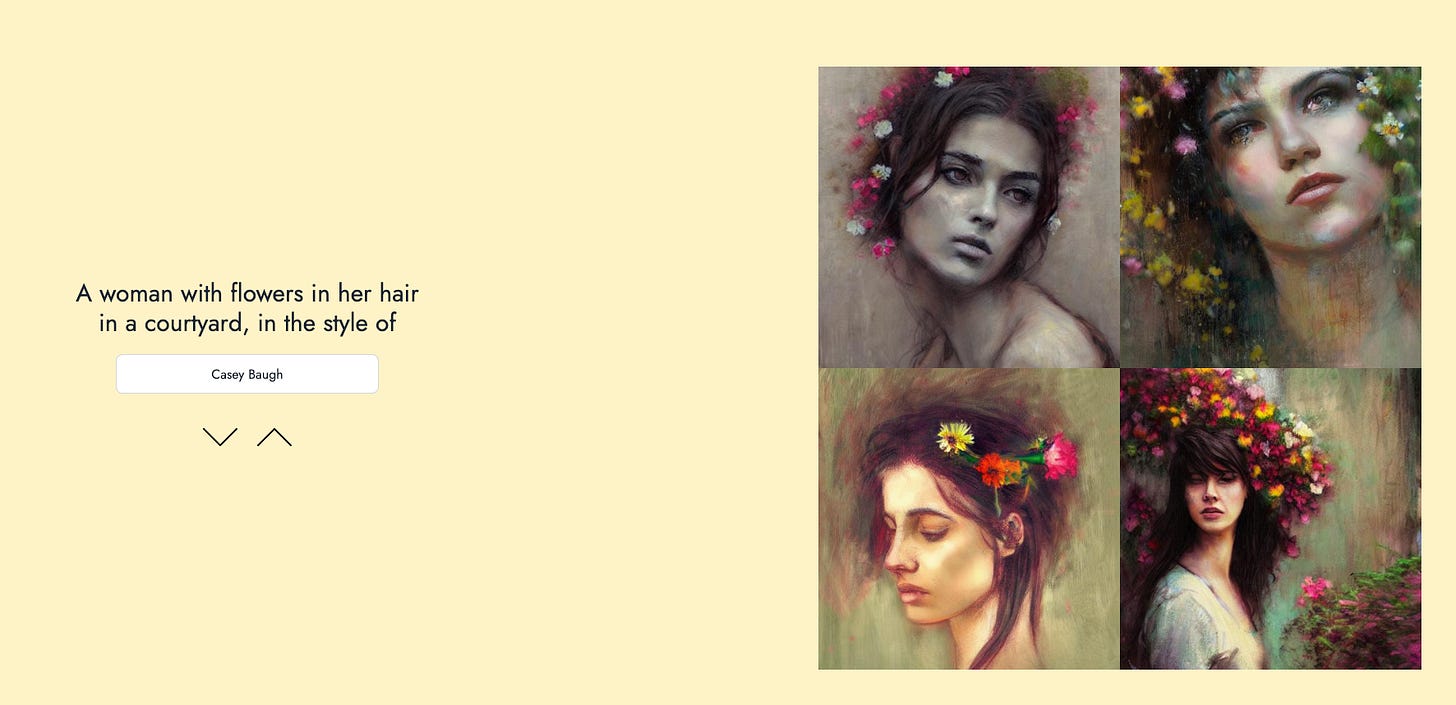

KREA

KREA is a tool to help find unique prompts for Stable Diffusion. Essentially, it is a search engine for Stable Diffusion prompts, letting you find images with a particular aesthetic along with the prompts that generated them. It’s worth trying if you haven’t played with it yet.

And if you want to quickly browse what the model produces when you ask it for a particular style of image, this elegant little tool does the work for you.

I find these sites great for inspiration and for discovering the many creative ways people generate interesting images as they explore the model and its potential. Also, if you have an M1 Mac and want to play with the model, Charl-e is an excellent app that works quite well.

DreamFusion: Text-to-3D using 2D Diffusion

Recent breakthroughs in text-to-image synthesis have been driven by diffusion models trained on billions of image-text pairs. Adapting this approach to 3D synthesis would require large-scale datasets of labeled 3D data and efficient architectures for denoising 3D data, neither of which currently exist. In this work, we circumvent these limitations by using a pretrained 2D text-to-image diffusion model to perform text-to-3D synthesis. We introduce a loss based on probability density distillation that enables the use of a 2D diffusion model as a prior for optimization of a parametric image generator. Using this loss in a DeepDream-like procedure, we optimize a randomly-initialized 3D model (a Neural Radiance Field, or NeRF) via gradient descent such that its 2D renderings from random angles achieve a low loss. The resulting 3D model of the given text can be viewed from any angle, relit by arbitrary illumination, or composited into any 3D environment. Our approach requires no 3D training data and no modifications to the image diffusion model, demonstrating the effectiveness of pretrained image diffusion models as priors.
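In symbols, the paper calls this probability-density-distillation loss Score Distillation Sampling (SDS). Paraphrasing its notation: θ are the NeRF parameters, x = g(θ) is a rendering, z_t = α_t x + σ_t ε is that rendering noised to timestep t with noise ε, y is the text prompt, ε̂_φ is the frozen diffusion model’s noise prediction, and w(t) is a timestep weighting. The gradient used to update the NeRF is, roughly:

```latex
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\big(\phi, x = g(\theta)\big)
  \triangleq \mathbb{E}_{t,\epsilon}\!\left[ w(t)\,\big(\hat{\epsilon}_\phi(z_t; y, t) - \epsilon\big)\,\frac{\partial x}{\partial \theta} \right]
```

The notable design choice is that the diffusion model’s own Jacobian is dropped, so gradients never flow back through the frozen image model, only through the renderer g. That is what lets an off-the-shelf 2D model act as a prior without any modification.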

🪛 Techniques

An Algorithm for Polygon Intersections

This article initially began as a short post discussing strategies that can determine rectangle intersections and collisions, for both axis-aligned rectangles as well as arbitrarily rotated rectangles. While working on a recent project however, I discovered that this intersection test can be extended to not only return the polygonal shape that is formed by the intersection of two non-axis aligned rectangles but also that of two convex polygons. Hence I extended the post to include this information as well. By GorillaSun
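GorillaSun’s own algorithm isn’t reproduced here. As a compact alternative for the convex case, here is a sketch using Sutherland-Hodgman clipping, a standard technique that is not necessarily the one the article uses, which returns the intersection polygon of two convex polygons, plus a quick axis-aligned rectangle overlap test. It assumes vertices are (x, y) tuples in counter-clockwise order with y pointing up; all helper names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def aabb_overlap(r1: Tuple[float, float, float, float],
                 r2: Tuple[float, float, float, float]) -> bool:
    """Axis-aligned rectangles given as (x, y, w, h); True if interiors overlap."""
    x1, y1, w1, h1 = r1
    x2, y2, w2, h2 = r2
    return x1 < x2 + w2 and x2 < x1 + w1 and y1 < y2 + h2 and y2 < y1 + h1


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); > 0 means b is left of the ray o->a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def line_intersection(p1: Point, p2: Point, a: Point, b: Point) -> Point:
    """Point where segment p1->p2 crosses the infinite line through a and b.

    Only called when p1 and p2 lie on opposite sides, so d1 != d2.
    """
    d1 = cross(a, b, p1)
    d2 = cross(a, b, p2)
    t = d1 / (d1 - d2)  # fraction along p1->p2 where the side test hits zero
    return (p1[0] + t * (p2[0] - p1[0]), p1[1] + t * (p2[1] - p1[1]))


def clip_convex(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: clip `subject` against each edge of convex `clipper`.

    Returns the intersection polygon, or [] if the polygons do not overlap.
    """
    output = list(subject)
    n = len(clipper)
    for i in range(n):
        a, b = clipper[i], clipper[(i + 1) % n]
        inputs, output = output, []
        if not inputs:
            break  # already fully clipped away
        prev = inputs[-1]
        for curr in inputs:
            curr_in = cross(a, b, curr) >= 0  # inside = left of (or on) edge a->b
            prev_in = cross(a, b, prev) >= 0
            if curr_in:
                if not prev_in:  # entering: add the crossing point first
                    output.append(line_intersection(prev, curr, a, b))
                output.append(curr)
            elif prev_in:  # leaving: add only the crossing point
                output.append(line_intersection(prev, curr, a, b))
            prev = curr
    return output


def polygon_area(poly: List[Point]) -> float:
    """Signed shoelace area; positive for counter-clockwise polygons."""
    n = len(poly)
    return 0.5 * sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                     for i in range(n))


if __name__ == "__main__":
    square = [(0, 0), (2, 0), (2, 2), (0, 2)]
    shifted = [(1, 1), (3, 1), (3, 3), (1, 3)]
    print(clip_convex(square, shifted))
```

Clipping the two overlapping squares yields the unit square from (1, 1) to (2, 2). When vertices land exactly on a clipping edge (for example, a square inscribed in a rotated square), the output can contain duplicate points; the area is still correct, but a production version would probably remove them.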

📚 Books

Machine Hallucinations: Architecture and Artificial Intelligence

Not to be confused with Refik Anadol’s work of the same name, this is volume 92 of Architectural Design. I found it an inspiring look at using deep learning techniques to generate images and textures for architecture, and it also includes several articles featuring the likes of Sofia Crespo (“Augmenting Digital Nature”), Mario Klingemann, and others.
