Procedural Jellyfish, Slang Shaders, and Generative vs Generative AI

#088 Creative Coding / Generative Arts Weekly

In the Age of Ideas the barrier to entry exists more in our minds than it does in the real world. - Alan Philips

Hi All!

Yes, it’s been a little while. 👋

Welcome, new subscribers! It’s wonderful to see you all finding value in this newsletter, whether you're here to learn, relearn, or discover new techniques.

I’ve closely followed developments in machine learning and deep learning for several years. In fact, these fields sparked my initial interest in data science, as I was fascinated by tools that could themselves learn and adapt.

At the same time, I'm also an artist. For a long time, these two interests existed separately for me. When Google introduced DeepDream around 2015-2016, I began closely tracking its progress. However, early implementations like DeepDream and initial explorations into transfer learning felt distinctly removed from traditional artistic methods.

Interestingly, over the past three years, I've found myself increasingly caught between my creative instincts as an artist and my analytical interests as a data scientist. This tension—this sense of internal conflict—has sparked curiosity in me.

In the upcoming newsletters, I'll be exploring this tension more deeply: why does it exist, and is it justified? Additionally, I believe it’s valuable to document how artistic inquiry in these realms differs from traditional practices. Both fields require unique skill sets, yet instead of mastering physical materials like brushes and paint, one must grasp mathematics and programming paradigms—proxies for traditional artistic tools.

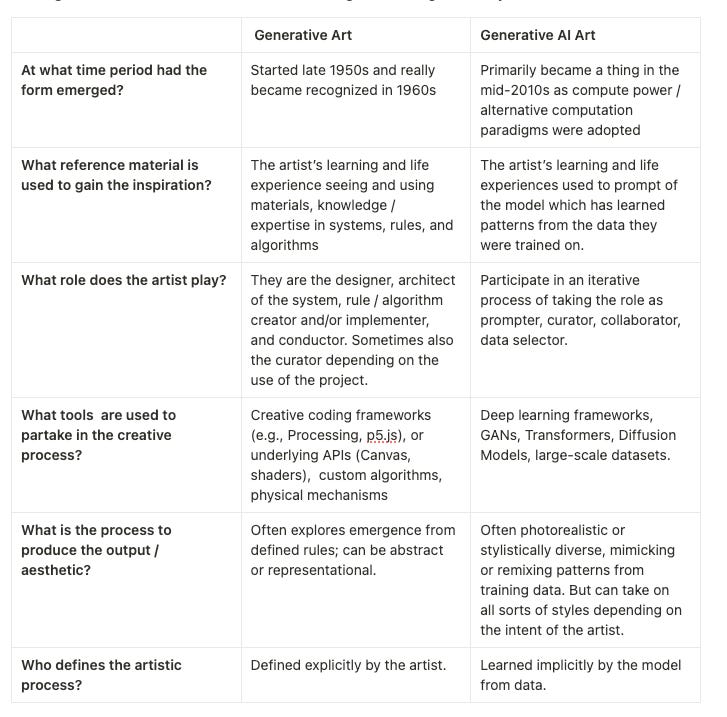

To start, I've assembled a set of questions designed to clarify these differences. Using these questions, I've created a chart to help track and better understand how these technologies diverge and intersect. I look forward to sharing these insights with you.

These questions will be the basis for what I write next. I’ve also been coming up with further questions to ask when trying to identify these differences:

What is the artist’s relationship to unpredictability or randomness in the creative process?

What does the audience perceive as the role of the artist?

Where is the boundary between human and machine agency?

What technical limitations shape artistic choices?

How is originality defined and maintained within either art form?

Of course, these are more philosophical questions, but understanding intent is as important to consider as the aesthetical or

Well I hope you have a wonderful evening or morning. Oh and also a great start to your week!

Chris

Tutorials & Articles

Aurelia – Procedural Jellyfish

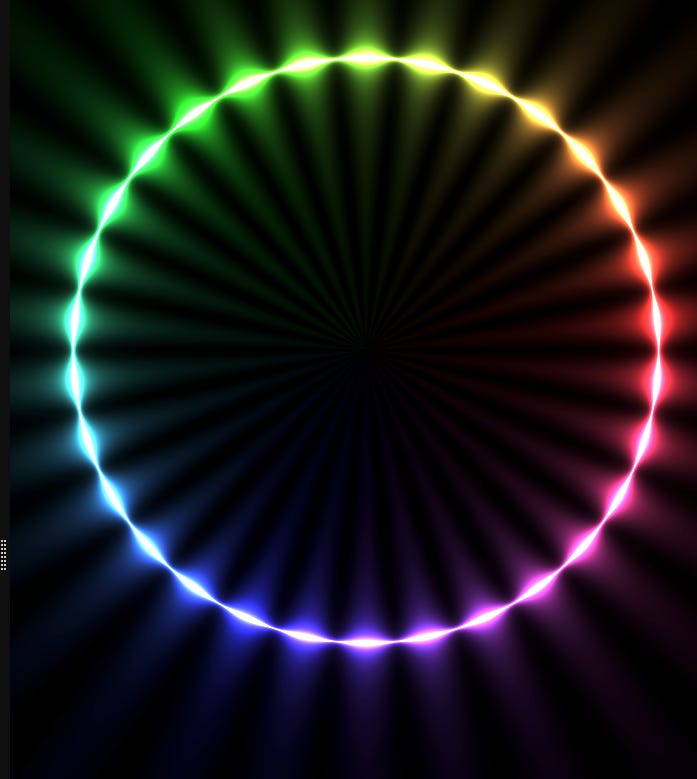

A sibling project to softbodies by the same author, Aurelia synthesizes ethereal jellyfish by sinusoidally contracting a hemisphere “bell” mesh while GPU-based Verlet chains animate the tentacles. All textures, volumetric lighting, and even the pulsing iridescence are generated on the fly in GLSL/TSL shaders, making it a textbook example of fully procedural creatures rendered via WebGPU.
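To make those two mechanics concrete, here is a minimal CPU-side sketch in Python of a sinusoidal bell pulse and a single Verlet-integrated tentacle chain. This is not Aurelia's code, which runs in shaders on the GPU. The function names, the 2D setting, and every constant (amplitude, frequency, gravity, segment length, iteration count) are assumptions chosen only for illustration.

```python
import math


def bell_radius(t, base=1.0, amplitude=0.15, frequency=0.5):
    """Radius of the bell at time t (seconds), pulsing sinusoidally."""
    return base * (1.0 + amplitude * math.sin(2.0 * math.pi * frequency * t))


def step_chain(points, prev_points, anchor, dt=1 / 60, gravity=-0.5,
               segment=0.1, iterations=20):
    """Advance a 2D tentacle chain by one Verlet step.

    points / prev_points are lists of (x, y) tuples for the current and
    previous frame. The first point is pinned to `anchor` (the bell rim).
    Returns (new_points, points) so the caller can feed them back in.
    """
    n = len(points)
    new_points = []
    for (x, y), (px, py) in zip(points, prev_points):
        # Position Verlet: x_next = 2x - x_prev + a * dt^2
        new_points.append((2 * x - px, 2 * y - py + gravity * dt * dt))
    new_points[0] = anchor

    # Relax distance constraints so each segment keeps its rest length.
    for _ in range(iterations):
        for i in range(n - 1):
            (ax, ay), (bx, by) = new_points[i], new_points[i + 1]
            dx, dy = bx - ax, by - ay
            dist = math.hypot(dx, dy) or 1e-9
            corr = (dist - segment) / dist
            if i == 0:
                # The anchor is pinned, so only the second point moves.
                new_points[1] = (bx - dx * corr, by - dy * corr)
            else:
                new_points[i] = (ax + 0.5 * dx * corr, ay + 0.5 * dy * corr)
                new_points[i + 1] = (bx - 0.5 * dx * corr, by - 0.5 * dy * corr)
    return new_points, points


if __name__ == "__main__":
    pts = [(0.1 * i, 0.0) for i in range(5)]  # chain starts out horizontal
    prev = list(pts)
    for frame in range(60):
        pts, prev = step_chain(pts, prev, anchor=(0.0, 0.0))
    print("bell radius at t=0.5s:", bell_radius(0.5))
    print("tentacle tip after 1s:", pts[-1])
```

Verlet integration is a popular choice for this kind of effect because velocity is implicit in the difference between the current and previous positions, so constraint corrections feed back into the motion without extra bookkeeping.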

Claude + Blender

A new tutorial shows how to bridge Claude Sonnet with Blender via the open-standard Model Context Protocol (MCP), using Siddharth Ahuja’s open-source Blender-MCP add-on. Over 15 minutes the presenter demonstrates three workflow wins (voice-driven procedural shoe design, hands-free scene housekeeping, and instant pipeline plug-ins) before finishing with a hands-on installation guide.

I am of two minds. On the one hand, I don’t care that people don’t have to work as hard as in the past to get interesting results. At the same time, I’m excited that more tooling has been placed into people's hands. It is counterintuitive, yet the thought that anyone could actually model something is exciting. The process here is quite interesting; we continue to see new interfaces that will bring more creativity to the table.

It will certainly be interesting to watch where this goes.

The Slang Shading Language and Compiler

Slang is an open-source, next-generation shading language that stays largely source-compatible with existing HLSL (and GLSL) code while adding modern luxuries such as true modularity, multiple back-end targets (D3D12, Vulkan, Metal, CUDA, even WebGPU) and built-in automatic differentiation for neural-graphics workflows. The project recently moved under Khronos Group governance and is leaning hard into “shader-as-ML-kernel” use cases, complete with PyTorch integration, making it a compelling bridge between traditional real-time rendering and differentiable graphics research.

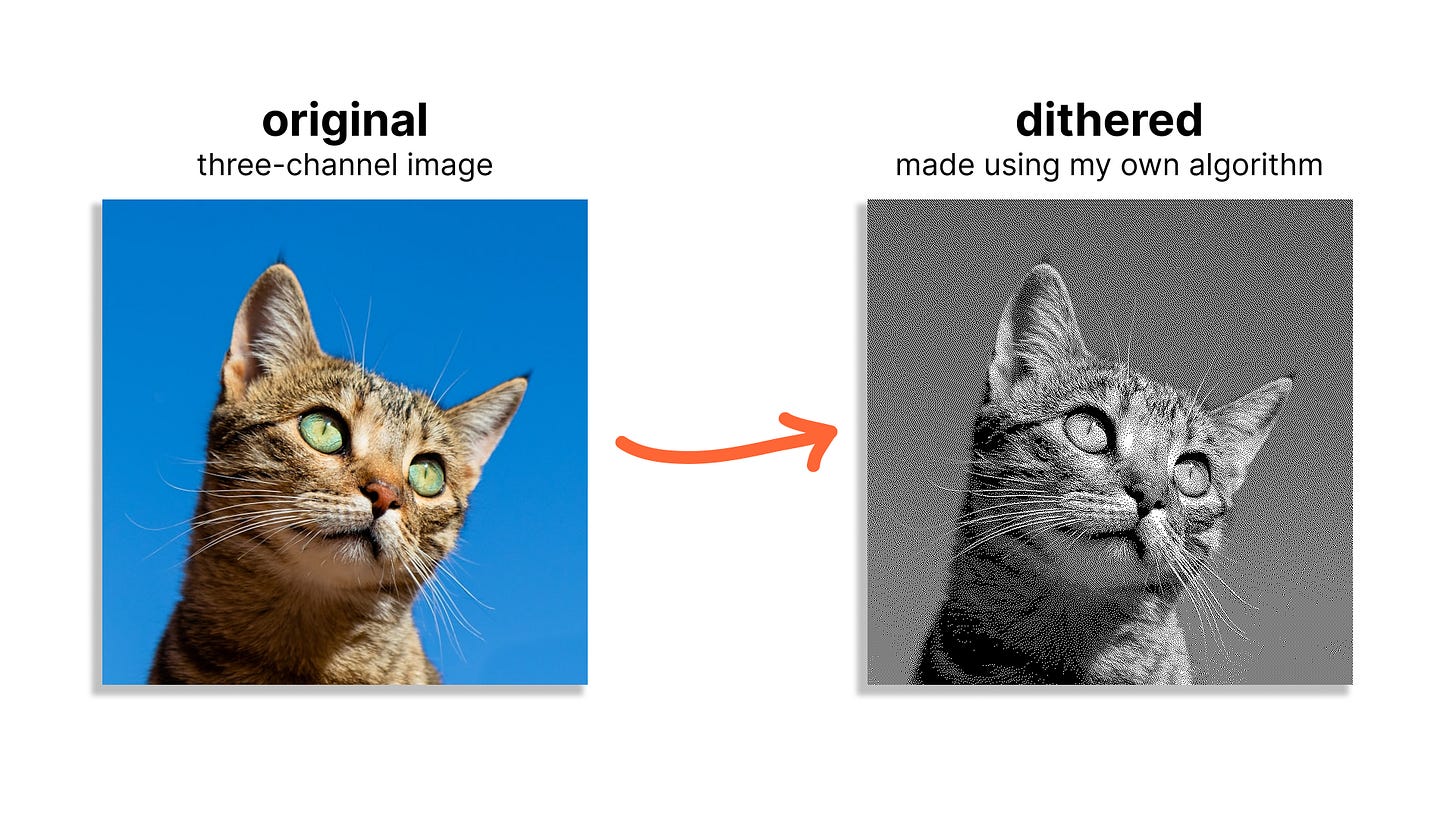

Writing My Own Dithering Algorithm in Racket

Amanvir Parhar set out to drive a tiny thermal printer called Guten and ended up hand-rolling a brand-new error-diffusion algorithm in Racket. The post walks through grayscale conversion, experiments with Atkinson vs. Floyd-Steinberg, and culminates in a custom kernel that balances speed and aesthetic fidelity—complete with side-by-side Taj Mahal comparisons and full source on GitHub. It’s a fun deep dive into image-processing math illustrated by lots of code snippets and cat photos.

These are classic algorithms to try implementing. I enjoy seeing it done in different languages, but really it's the process of getting into the weeds that leads to truly understanding them.
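For readers who want to get into those weeds themselves, here is a short Python sketch of generic error diffusion using the two textbook kernels the post compares, Floyd-Steinberg and Atkinson. It is not the custom kernel from the post, and it is not Racket. The names `error_diffuse`, `FLOYD_STEINBERG`, and `ATKINSON` are my own, and the input is assumed to be a grayscale image stored as rows of floats in the range 0 to 1.

```python
# Each kernel entry is ((dx, dy), weight): where to push the rounding error.
FLOYD_STEINBERG = [
    ((1, 0), 7 / 16),
    ((-1, 1), 3 / 16), ((0, 1), 5 / 16), ((1, 1), 1 / 16),
]

# Atkinson only diffuses 6/8 of the error, which brightens highlights
# and deepens shadows compared to Floyd-Steinberg.
ATKINSON = [
    ((1, 0), 1 / 8), ((2, 0), 1 / 8),
    ((-1, 1), 1 / 8), ((0, 1), 1 / 8), ((1, 1), 1 / 8),
    ((0, 2), 1 / 8),
]


def error_diffuse(gray, kernel):
    """Dither rows of floats in [0, 1] down to rows of 0/1 values."""
    height, width = len(gray), len(gray[0])
    work = [row[:] for row in gray]  # copy so the input is left untouched
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            old = work[y][x]
            new = 1 if old >= 0.5 else 0
            out[y][x] = new
            err = old - new
            # Spread the quantization error to pixels not yet visited.
            for (dx, dy), weight in kernel:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    work[ny][nx] += err * weight
    return out


if __name__ == "__main__":
    # A horizontal gradient from black to white, 32 pixels wide.
    gradient = [[x / 31 for x in range(32)] for _ in range(8)]
    for name, kernel in (("Floyd-Steinberg", FLOYD_STEINBERG),
                         ("Atkinson", ATKINSON)):
        print(name)
        for row in error_diffuse(gradient, kernel):
            print("".join("#" if v else "." for v in row))
```

Swapping the kernel list is all it takes to experiment with a new diffusion pattern, which is roughly the kind of tinkering the post describes.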

Radiance Fields and the Future of Generative Media

In this SIGGRAPH-style talk, a speaker from a UC Berkeley research group surveys the explosive progress in Neural Radiance Fields: from NeRF’s original view-synthesis breakthrough to 3D Gaussian Splatting and real-time, text-conditioned scene generation. The speaker argues that radiance-field representations will do for 3D content what latent diffusion did for images, unlocking end-to-end generative pipelines that treat geometry, appearance, and even physics as optimizable continuums.

This is a good overview of radiance fields and of the current trends in generative media more broadly.

Surface-Stable Fractal Dithering Explained

Rune Johansen, inspired by the dithering in Lucas Pope's Return of the Obra Dinn, unveils a new 1-bit shading trick that deposits ordered-dither “dots” directly in a model’s UV space, yielding patterns that cling to surfaces instead of swimming across pixels. The video demystifies the maths behind the fractal noise look-up, shows Unity demos, and releases the shader under an open-source license for anyone chasing retro monochrome aesthetics without temporal crawl.
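For context on what "swimming across pixels" means, here is a small Python sketch of classic screen-space ordered dithering with a 4x4 Bayer matrix. This is the conventional baseline, not Johansen's surface-stable technique. The function name `ordered_dither` and the rows-of-floats input format are assumptions for illustration.

```python
# Standard 4x4 Bayer index matrix; values 0..15 define the threshold order.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def ordered_dither(gray):
    """Threshold rows of floats in [0, 1] against a tiled Bayer pattern."""
    out = []
    for y, row in enumerate(gray):
        out_row = []
        for x, value in enumerate(row):
            # The threshold depends only on the pixel's screen position.
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
            out_row.append(1 if value > threshold else 0)
        out.append(out_row)
    return out


if __name__ == "__main__":
    gradient = [[x / 31 for x in range(32)] for _ in range(8)]
    for row in ordered_dither(gradient):
        print("".join("#" if v else "." for v in row))
```

Because the threshold is indexed by screen coordinates `(x, y)`, the dot pattern stays fixed to the screen while objects move underneath it. Looking the pattern up in the model's UV space instead, as the video describes, is what lets the dots travel with the surface.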

softbodies – Real-time Soft-Body Simulation in the Browser

This WebGPU + Three.js repo demonstrates true volumetric soft-body deformation and self-collision entirely in-browser. A tetrahedral mass-spring lattice runs in compute shaders, while Three.js’s new WebGPURenderer handles draw calls. The README links to a live demo where squishy blobs bounce off each other at 60 fps—showcasing just how far WebGPU has matured for physics-heavy generative art.

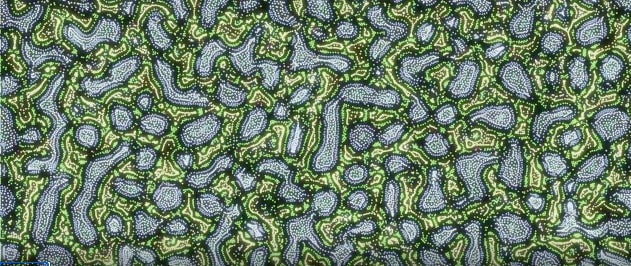

Particle Life Simulation in Browser using WebGPU

Ever wondered what happens when you throw out Newton’s third law? This blog ports the viral “Particle Life” model—where asymmetric forces cause predator-prey-style swarms—to the browser. Leveraging compute shaders, thousands of particles interact in real time with adjustable attraction/repulsion matrices, friction, and boundary conditions. The post is equal parts performance diary and playground link, inviting readers to tweak parameters and watch emergent “organisms” evolve.
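The asymmetry is easier to see in code. Below is a minimal CPU sketch in Python of one Particle Life update on a wrapping unit square. The blog's version runs in WebGPU compute shaders, and its exact force curve is not given here, so the piecewise-linear `force` function is one commonly used choice and should be read as an assumption, as should the parameter names and defaults (`r_max`, `beta`, `friction`, `strength`).

```python
import math


def force(r, a, beta=0.3):
    """Force magnitude for normalized distance r and attraction a.

    Below beta every pair repels (keeps particles from collapsing);
    between beta and 1 the force ramps up to `a` and back down to zero.
    """
    if r < beta:
        return r / beta - 1.0
    if r < 1.0:
        return a * (1.0 - abs(2.0 * r - 1.0 - beta) / (1.0 - beta))
    return 0.0


def step(pos, vel, kinds, attraction, r_max=0.2, dt=0.02,
         friction=0.9, strength=5.0):
    """One O(n^2) update. attraction[i][j] is how kind i reacts to kind j."""
    new_vel = []
    for i, (xi, yi) in enumerate(pos):
        fx = fy = 0.0
        for j, (xj, yj) in enumerate(pos):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            # Shortest displacement on a wrapping (toroidal) unit square.
            dx -= round(dx)
            dy -= round(dy)
            d = math.hypot(dx, dy)
            if 0.0 < d < r_max:
                f = force(d / r_max, attraction[kinds[i]][kinds[j]])
                fx += f * dx / d
                fy += f * dy / d
        vx = vel[i][0] * friction + fx * r_max * strength * dt
        vy = vel[i][1] * friction + fy * r_max * strength * dt
        new_vel.append((vx, vy))
    new_pos = [((x + vx * dt) % 1.0, (y + vy * dt) % 1.0)
               for (x, y), (vx, vy) in zip(pos, new_vel)]
    return new_pos, new_vel


if __name__ == "__main__":
    # Kind 0 is attracted to kind 1, but kind 1 is repelled by kind 0.
    attraction = [[0.0, 1.0],
                  [-1.0, 0.0]]
    pos = [(0.4, 0.5), (0.5, 0.5)]
    vel = [(0.0, 0.0), (0.0, 0.0)]
    pos, vel = step(pos, vel, kinds=[0, 1], attraction=attraction)
    print(vel)  # both x velocities are positive: a chase, not a balance
```

Because `attraction[0][1]` and `attraction[1][0]` differ, the two forces in a pair are not equal and opposite, so momentum is not conserved. That imbalance is what produces the chasing, predator-prey motion the post describes.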

Having Fun with POPs in TouchDesigner

TouchDesigner’s brand-new Point Operators (POPs) form a GPU-accelerated node family that manipulates points, particles, point-clouds, line-strips and more, folding the strengths of SOPs, CHOPs and TOPs into one modern workflow. In this 38-minute walkthrough the presenter tours core POP